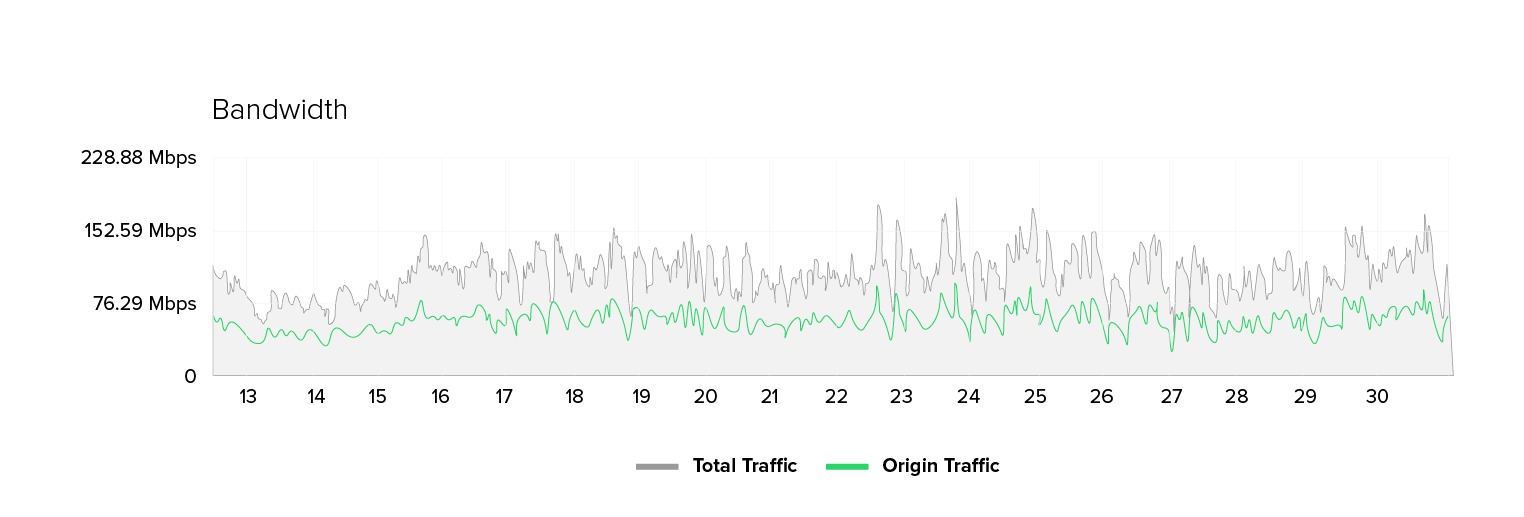

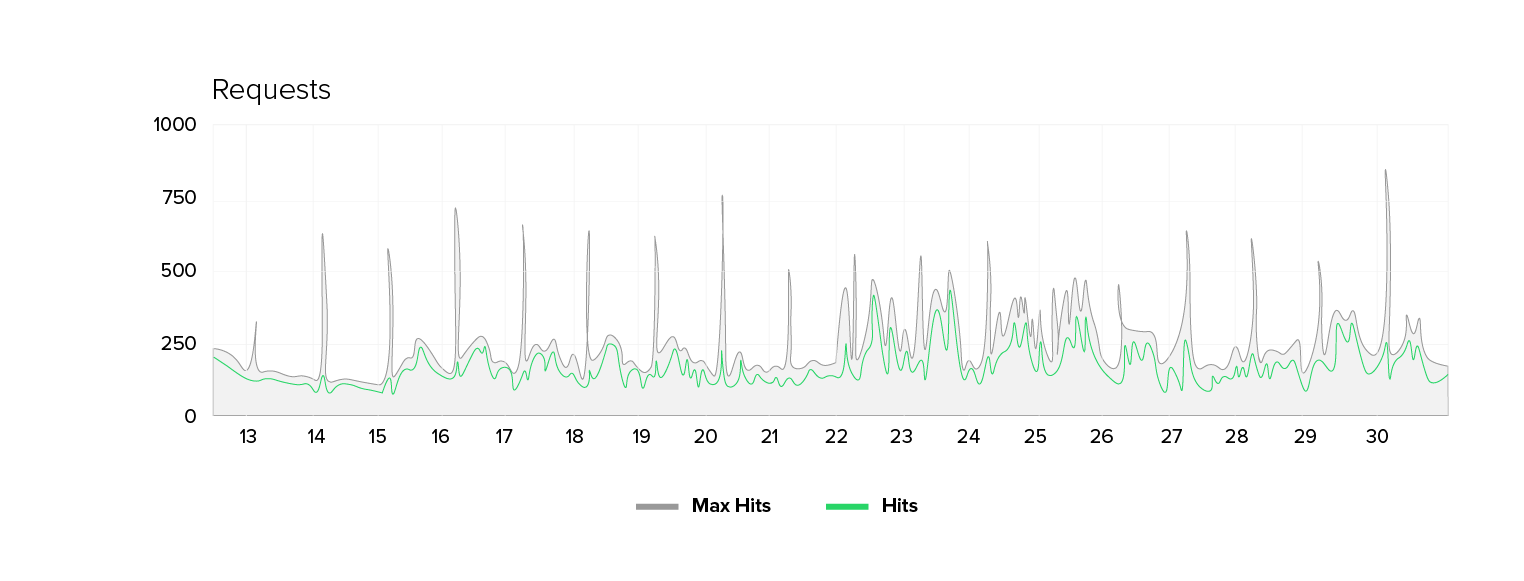

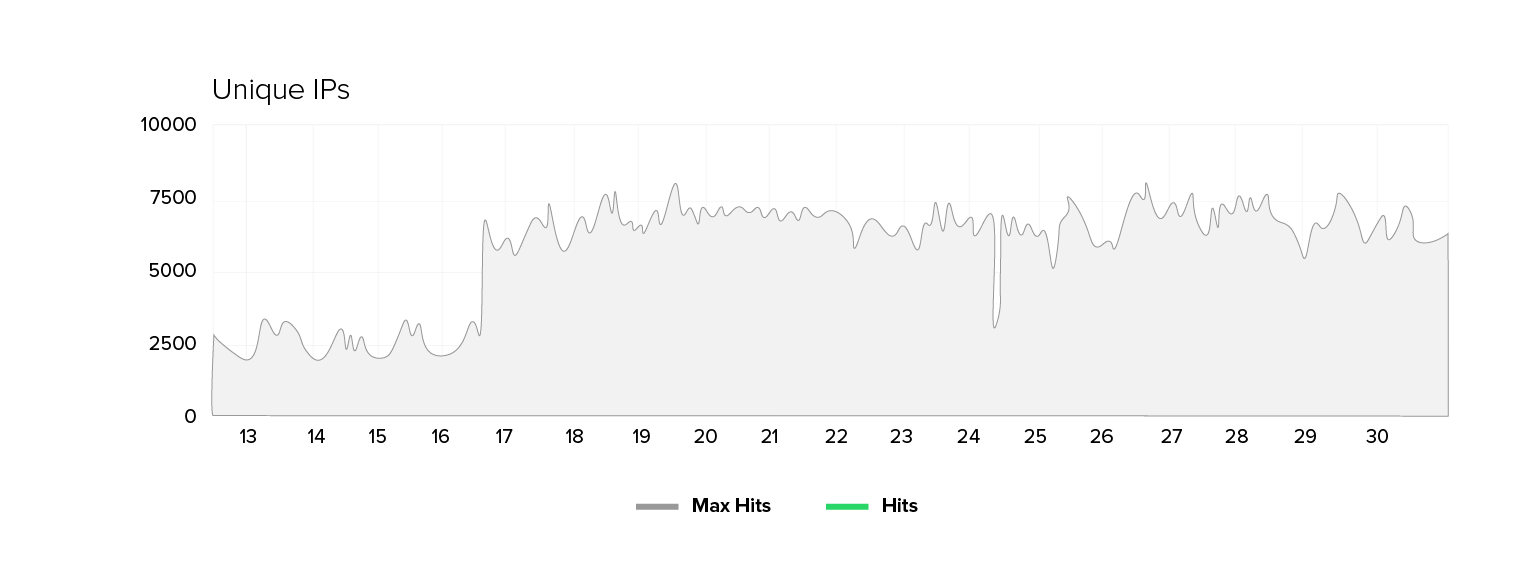

We have been observing an unusual pattern in the IT environment of a public sector customer for quite some time. The number of unique IP addresses at peak times has jumped from between 2,000 and 2,500 to a regular range of 5,000 to 8,000. With visitor numbers at least doubling, one would expect a proportional increase in requests and required bandwidth. However, bandwidth and request volume have increased only moderately (by a factor of 1.3 to 1.7), while the number of unique IPs has stayed at the new, elevated level.

This is more than a minor statistical detail. It points to a bot-driven pattern with an unexpected structure.

What does this phenomenon look like?

Since the cutoff date, the customer has seen a sustained increase in unique IP addresses rather than a short-lived spike.

The metrics at a glance: The number of unique IPs has tripled at times. Yet the number of requests has increased by only 50 to 70 percent, and the bandwidth by just 30 to 70 percent. At the same time, the origin server's response time has deteriorated: average values are higher, peaks are more frequent, and 500 errors occur occasionally. Notably, there are no signs of massive content downloads (e.g. images or scripts), and the shift in website traffic profiles is subtle and partly contradictory.
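The mismatch can be made explicit with a little arithmetic. The following is a minimal sketch that uses only the growth factors quoted in this article (unique IPs by a factor of 2 to 3, requests by a factor of 1.5 to 1.7); the function name `requests_per_ip_factor` is chosen for illustration only.

```python
def requests_per_ip_factor(ip_growth, request_growth):
    """Return how the average number of requests per unique IP changes.

    A value of 1.0 means the average client behaves as before;
    a value below 1.0 means each new IP contributes fewer requests.
    """
    if ip_growth <= 0:
        raise ValueError("ip_growth must be positive")
    return request_growth / ip_growth


# Growth factors taken from the text: IPs x2 to x3, requests x1.5 to x1.7.
lowest = requests_per_ip_factor(ip_growth=3, request_growth=1.5)
highest = requests_per_ip_factor(ip_growth=2, request_growth=1.7)

print(f"requests per IP: {lowest:.2f}x to {highest:.2f}x of the baseline")
```

Under these figures, the average IP sends only about half to 85 percent of the requests it used to, which is what one would expect if many of the new sources issue just a handful of requests each.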

Why does this discrepancy stand out?

When the number of real users increases, the volume of requests and bandwidth typically rise proportionally. A browser loads not only HTML pages, but also images, fonts, stylesheets, scripts, and videos. This results in a significantly higher load per visit.

Bots behave differently. They are usually interested in the HTML – the “raw text” of the page – and ignore everything necessary for visual rendering.

This explains why the number of unique IP addresses increases dramatically without the bandwidth increasing at the same rate.

- Many bots generate little data per request.

- But when thousands act simultaneously, the result is a very high connection density.

Although bots that only load HTML generate minimal traffic compared to real browsers, when thousands of them act simultaneously, they can have a significant impact on server resources.
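This difference can be checked against access logs. The sketch below is one possible heuristic, not the method used in this case: it assumes the log has already been parsed into `(ip, path)` pairs and flags clients that request several pages but never a static asset. The suffix list, the `min_pages` threshold, and the function names are assumptions for illustration. Browsers with a warm cache also fetch few assets, so the result is a hint, not proof.

```python
from collections import defaultdict
from pathlib import PurePosixPath

# Assumed list of file suffixes that count as static assets.
ASSET_SUFFIXES = {
    ".css", ".js", ".png", ".jpg", ".jpeg", ".gif",
    ".svg", ".ico", ".woff", ".woff2", ".mp4",
}


def is_asset(path):
    """Return True if the request path points to a static asset."""
    clean = path.split("?", 1)[0]  # drop the query string
    return PurePosixPath(clean).suffix.lower() in ASSET_SUFFIXES


def html_only_clients(requests, min_pages=3):
    """Return IPs with at least min_pages page requests and no asset requests."""
    pages = defaultdict(int)
    assets = defaultdict(int)
    for ip, path in requests:
        if is_asset(path):
            assets[ip] += 1
        else:
            pages[ip] += 1
    return sorted(
        ip for ip, count in pages.items()
        if count >= min_pages and assets[ip] == 0
    )


# Hypothetical sample: one browser-like client, one HTML-only client.
sample = [
    ("192.0.2.1", "/"),
    ("192.0.2.1", "/static/app.js"),
    ("192.0.2.1", "/news"),
    ("192.0.2.1", "/contact"),
    ("198.51.100.7", "/search?q=a"),
    ("198.51.100.7", "/search?q=b"),
    ("198.51.100.7", "/search?q=c"),
]
print(html_only_clients(sample))
```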

Technical anomalies

- High number of unique IP addresses and moderate bandwidth: The number of unique IP addresses increases by a factor of 2 to 3, while the bandwidth increases only by a factor of roughly 1.3 to 1.7.

- Increased server latencies: Origin response times are higher on average and show significantly more peaks.

- Request mix: The share of POST requests rises from around 1% to around 3%, which points to abuse of forms or the site search.

- Source profiles: More traffic comes from infrastructure and cloud providers instead of being spread across end-customer ISPs.

- Geographical anomaly: Requests from Iran originate almost entirely from a single ISP. It is unusual for one provider to account for 99% of a country's traffic.

- No clear error waves: Moderate changes in the 500 and redirect rates indicate that there is no complete system failure.
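Two of the anomalies above, the POST share and the concentration of a country's traffic on one ISP, are simple ratios that can be tracked continuously. The following sketch assumes that each request has already been enriched with its HTTP method, country, and ISP (for example by a GeoIP/ASN lookup, which is not shown); all names and sample values are hypothetical.

```python
from collections import Counter, defaultdict


def post_share(methods):
    """Return the fraction of requests that use the POST method."""
    counts = Counter(method.upper() for method in methods)
    total = sum(counts.values())
    return counts["POST"] / total if total else 0.0


def isp_concentration(records):
    """Map each country to (top ISP, share of that country's requests)."""
    per_country = defaultdict(Counter)
    for country, isp in records:
        per_country[country][isp] += 1
    result = {}
    for country, isps in per_country.items():
        top_isp, hits = isps.most_common(1)[0]
        result[country] = (top_isp, hits / sum(isps.values()))
    return result


# Hypothetical sample data mirroring the proportions described above.
methods = ["GET"] * 97 + ["POST"] * 3
records = [("IR", "isp-a")] * 99 + [("IR", "isp-b")] + [("DE", "isp-c"), ("DE", "isp-d")]

print(post_share(methods))
print(isp_concentration(records)["IR"])
```

A share close to 1.0 for a single ISP, as in the hypothetical "IR" sample, is the kind of value that deserves a closer look.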

Plausible explanations based on the data

The combination of many unique IP addresses and a relatively small increase in bandwidth is consistent with the hypothesis of a botnet that primarily sends minimal HTTP requests, such as search queries or form submissions.

Possible Variants:

- Compromised Servers/Cloud VMs: The high volume of traffic from infrastructure providers indicates that many of the sources are powerful servers, not just IoT devices. These hosts can generate numerous requests with minimal overhead.

- End-user bot activity in specific countries: Highly concentrated traffic from a single country may indicate targeted proxies or orchestrated action.

- Slow or “connection-heavy” attacks: Even without a classic Slowloris attack, a similar effect can occur, because many simultaneous, short-lived connections can exhaust server resources. Modern browsers optimize connections using keep-alive, multiplexing, and session reuse, which results in less overhead per request. Simple bots, by contrast, establish a new TCP connection for each request. Each time, the server must go through the complete connection setup and teardown, including the handshake and the TIME_WAIT phase.

The result is thousands of short-lived connections that transfer very little data but still consume significant resources.

Through the sheer number of connections alone, even a simple botnet can achieve an effect similar to a Slowloris attack.
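A rough estimate shows why connection reuse matters. The sketch below applies Little's law (items in a state = arrival rate × time spent in that state) to sockets in TIME_WAIT. It assumes that the server is the side that closes the connection, so TIME_WAIT accumulates there, and that TIME_WAIT lasts 60 seconds, as is common on Linux. The request rates are hypothetical and not taken from the customer's data.

```python
def time_wait_sockets(requests_per_second, requests_per_connection=1,
                      time_wait_seconds=60.0):
    """Estimate the steady-state number of sockets in TIME_WAIT.

    Assumes every connection ends up in TIME_WAIT on the server side.
    """
    if requests_per_connection < 1:
        raise ValueError("requests_per_connection must be at least 1")
    new_connections_per_second = requests_per_second / requests_per_connection
    return new_connections_per_second * time_wait_seconds


# Hypothetical load of 200 requests per second.
bots = time_wait_sockets(200, requests_per_connection=1)       # one connection per request
browsers = time_wait_sockets(200, requests_per_connection=50)  # keep-alive reuse

print(bots, browsers)
```

With the same request rate, the bot-like pattern leaves 50 times as many sockets in TIME_WAIT as the browser-like pattern in this example, without transferring any more data.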

Why browsers and bots behave so differently

Real browsers are “cooperative.” They keep connections open, bundle requests, use HTTP/2 multiplexing, and efficiently process content. Bots, on the other hand, open many small sessions, often without keep-alive or compression, and only request bare HTML pages.

This results in a high volume of connections with low bandwidth, which matches exactly what we see in the customer's logs. It is notable that many of these bots do not retrieve images or scripts, nor do they interact with forms or cookies. This strongly suggests that they are not real users but automated crawlers or attack scripts that systematically scan endpoints or perform stress tests.

What is missing so far and why it is important

Current monitoring data only provides trend data. It lacks details about the behaviour of individual connections. For example, it is unclear whether the requests were short-lived or long-lasting, how much data was transferred per session, and whether the clients behaved like humans or machines.

Understanding this requires detailed network observation, i.e., collecting metrics that record individual sessions, request types, and response times. Additionally, short traffic recordings (packet captures) or higher-resolution flow data help identify patterns and repetitions.
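As an illustration of what such session-level metrics could look like, the following sketch aggregates individual request events into per-session statistics (request count, bytes transferred, duration). The input format, a sequence of `(session_id, timestamp, size_in_bytes)` tuples, and all names are assumptions; real flow or log data would need to be mapped to this shape first.

```python
from dataclasses import dataclass


@dataclass
class SessionStats:
    requests: int = 0
    bytes_sent: int = 0
    first_seen: float = float("inf")
    last_seen: float = float("-inf")

    @property
    def duration(self):
        """Seconds between the first and last request of the session."""
        return self.last_seen - self.first_seen


def summarize_sessions(events):
    """Aggregate (session_id, timestamp, size) events into per-session stats."""
    sessions = {}
    for session_id, timestamp, size in events:
        stats = sessions.setdefault(session_id, SessionStats())
        stats.requests += 1
        stats.bytes_sent += size
        stats.first_seen = min(stats.first_seen, timestamp)
        stats.last_seen = max(stats.last_seen, timestamp)
    return sessions


# Hypothetical events: one multi-request session, one single-request session.
events = [
    ("s1", 0.0, 18_000),
    ("s1", 0.4, 250_000),
    ("s1", 12.0, 20_000),
    ("s2", 3.0, 9_000),
]
for session_id, stats in summarize_sessions(events).items():
    print(session_id, stats.requests, stats.bytes_sent, stats.duration)
```

Sessions with a single request, a small payload, and a duration of zero would then stand out clearly from browser-like sessions.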

Only then can one determine with certainty whether the activity is the result of coordinated bots, misconfigurations, or targeted stress tests. Bot management systems or web application firewalls (WAFs) could help, as they recognize bot behaviour based on characteristics such as JavaScript activity, browser behaviour, and cookie usage.

This is essential to reliably distinguish human access from automated traffic.

Contact our experts to find out how you can remove harmful bots without blocking helpful ones. A proven bot management solution can help with that.

Recommended immediate measures

The following pragmatic steps are recommended to stabilize the situation and gain insights:

- Bot Management and Web Application Firewall (WAF): Use a bot management tool or, as an interim solution, a WAF with rate limits and challenge mechanisms. CAPTCHAs for forms and JavaScript checks for search endpoints quickly reduce automated traffic.

- More detailed network monitoring: Activate session-based logs and packet captures to analyze individual request behaviour.

- Rate limits and rate-based rules: Enforce rate limits on search and contact endpoints, as well as connection timeouts and keep-alive limits.

- Provider contact: Identify Internet Service Providers (ISPs) that deliver unusually high amounts of traffic and submit abuse tickets.

- Content delivery and scrubbing: Determine if CDN or scrubbing services can absorb particularly active IPs or high-load sources (bots or servers) at short notice.
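To illustrate the rate-limit idea from the list above, here is a minimal sliding-window limiter. It is a sketch of the general technique, not the configuration of any particular WAF or CDN; the class name, limits, and keys are assumptions. The key is deliberately generic: with thousands of sources that each send only a few requests, a per-IP limit alone catches little, so limiting per endpoint or per provider network may be more useful.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per key within window_seconds."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # key -> timestamps of accepted requests

    def allow(self, key, now):
        """Return True if the request is accepted, False if it is rate limited."""
        hits = self._hits[key]
        # Drop timestamps that have left the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


# Hypothetical limit: 2 requests per 10 seconds on the search endpoint.
limiter = SlidingWindowLimiter(max_requests=2, window_seconds=10)
decisions = [limiter.allow("/search", t) for t in (0.0, 1.0, 2.0, 10.5)]
print(decisions)
```

In production, `now` would come from a monotonic clock such as `time.monotonic()`, and rejected requests could be answered with a challenge instead of a hard block.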

Why resilience must be learned now

This case represents a new hybrid bot phenomenon. It combines a high number of sources, low bandwidth per request and sustained stress on dynamic endpoints over a period of weeks. Classic threshold alarms are insufficient, and an adaptive monitoring and protection concept is required.

This incident demonstrates that network resilience is not achieved through rigid rules. It requires the ability to recognize behavioural patterns, trigger automated countermeasures, and adapt to them dynamically.

Contact us if you want to understand how resilient your services are against such subtle bot activities. We support you with analysis and design of protection strategies, so your infrastructure remains stable even against quiet but persistent attacks.